I run into this "problem" every now and then and every single time my initial thoughts have basically been... What problem? What the hell is wrong with these people?-)

The dictionary definition of the "problem", I suppose, is that any knowledge we arrive at by inductive reasoning, that is, by empirical observation plus the assumption that the past is indicative of the future, is without justification. Or, as a theist would put it, science (supposedly) can't justify itself. But then neither can theism, or anything else for that matter, which rather makes the statement as such stupid. It should simply have been that nothing can be (absolutely) justified, and science has nothing to do with it.

From wikipedia: "In inductive reasoning, one makes a series of observations and infers a new claim based on them. For instance, from a series of observations that a woman walks her dog by the market at 8am on Monday, it seems valid to infer that next Monday she will do the same, or that, in general, the woman walks her dog by the market every Monday. That next Monday the woman walks by the market merely adds to the series of observations, it does not prove she will walk by the market every Monday. First of all, it is not certain, regardless of the number of observations, that the woman always walks by the market at 8am on Monday. In fact, Hume would even argue that we cannot claim it is "more probable", since this still requires the assumption that the past predicts the future. Second, the observations themselves do not establish the validity of inductive reasoning, except inductively."

And: "Deductive reasoning (top-down logic) contrasts with inductive reasoning (bottom-up logic) in the following way: In deductive reasoning, a conclusion is reached from general statements, but in inductive reasoning the conclusion is reached from specific examples."

Deductive reasoning is no better than inductive reasoning. In deductive reasoning the conclusion is uncertain because the premise (the general statement) is uncertain. In inductive reasoning the conclusion is uncertain because it doesn't follow with certainty from the premise (the specific example, which is certain). The two "ductions" may have different semantic contexts, but they are essentially the same. And it doesn't really matter; there is no problem. All knowledge is uncertain, and everyone should know this. What we as humans essentially do is collect statistics and trust them to a reasonable extent, because that is all we can ever hope to do. If you think there is something more to it, the difference is almost certainly semantic.

In a certain sense I would agree with Wittgenstein: "philosophy is just a byproduct of misunderstanding language".

Karl Popper argued (in my opinion correctly) that science does not use induction, and that induction is in fact a myth. Instead, knowledge is created by conjecture and criticism. The main role of observations and experiments in science, he argued, is in attempts to criticize and refute existing theories. According to Popper, the problem of induction as usually conceived asks the wrong question: it asks how to justify theories given that they cannot be justified by induction. Popper argued that justification is not needed at all, and that seeking justification "begs for an authoritarian answer". Instead, Popper said, what should be done is to look for and correct errors. Popper regarded theories that have survived criticism as better corroborated in proportion to the amount and stringency of the criticism but, in sharp contrast to inductivist theories of knowledge, emphatically as less likely to be true. Popper held that seeking theories with a high probability of being true was a false goal that conflicts with the search for knowledge. Science should seek theories that are, on the one hand, most probably false (which is the same as saying that they are highly falsifiable, so there are lots of ways they could turn out to be wrong), but which on the other hand have so far survived all actual attempts to falsify them (that is, they are highly corroborated).

Might it be that in fact the only reason we still have academic theists at all is simply that they see a problem of induction where none exists?

(It appears the video has been removed, a sure sign of a small soul somewhere along the line.)

There is nothing in particular wrong with deductive reasoning as such, but the theists are just doing it wrong. The premises must be founded on scientific evidence. They treat history etc. as if it were on par with science, but history is not science. History can never overturn what is possible; on the contrary, it rests on science, and as such science can overturn anything that was considered historically probable. What these theists are missing is a proper foundation of knowledge, the connection to reality.

Basically, the premises of deduction are based either on induction or on nothing at all. Therefore deduction can at best be on par with science, and at worst it reduces to irrelevant speculation or otherwise incoherent bullshit.

Krauss has many nice comments about deduction...

"You can make definitions of things, I can try to figure how the universe works, I'll make progress and you'll sit here."

"Q: Mathematics is based on deduction... Krauss: No, it's based on evidence."

Of course the theists will end up stating that the criterion of falsifiability is not itself falsifiable, which is totally irrelevant because it doesn't need to be: all knowledge that isn't fantasy is obtained through the scientific method and falsifiability. There are essentially no other methods of obtaining knowledge; fantasy is only semantic, and beyond that we can only do what we can. All claims regarding reality are by nature uncertain because they are empirical and based on limited evidence; claims which are not empirical, on the other hand, don't concern reality. So there you go.

Then they will say that atheism cannot be justified, but what they don't understand is that atheism is simply the rejection of claims which have not met their burden of proof, typically because the claims are not based on reality. And as Christopher Hitchens put it...

"What can be asserted without evidence can be dismissed without evidence."

I find this theistic quest for certainty through fantasy perverse. People should be honest about their limits; only then can one hope to gain more knowledge and understanding.

These debates are often plain red herrings (the fallacy of offering an irrelevant argument). Even if an argument taken on its own is (semantically) valid, meaning that the conclusion follows from the premises by the rules of logic, it is still unsound, and proves nothing, if we don't have good reasons to believe the premises are true. The premises of these arguments are often generalizations of naive everyday reasoning applied in a context far beyond their domain of validity, or simply premises with no statistics to back them up, basically bullshit fantasy premises. This will of course result in bullshit fantasy arguments.

Much of the first-cause bullshit has been debunked elsewhere, but what strikes me as odd is that he says (or quotes, at 14:35): "Clearly theists and atheists can agree on one thing, if anything exists at all, there must be something preceding it that always existed."

No, just no. As if this would go unchallenged. I, for example, disagree, and therefore by definition the sentence is false. I find it equally possible that the finite universe, with the big bang, can just exist without anything preceding it that always existed. In fact I find the whole notion of something eternally existing nonsense. It would have to be rigorously demonstrated that the premise is true, or even that the idea is coherent at all. The way these people pull premises out of their asses and assume they are valid is preposterous.

What the hell is this guy talking about... Science can't prove ontological, moral or historical truths? Of course not; nothing besides semantics can ever be proven with full certainty. As for moral truths, as far as we know there are none. Historical truth? Only in the sense that history occurred, but we can't know for certain what happened. Shouldn't it be trivial that historical truths can therefore never be proven? Shouldn't it also be obvious even to a fairly young child that we can never be absolutely certain of anything besides the semantics we personally use at that particular time, a category which is basically irrelevant in every way?

This is simply a result of the fact that everything we ever experience is an incomplete and finite experience, essentially an empirical thing. Having a circular semantic construct is hardly synonymous with justified knowledge, but that seems to be what many theists in academia would have us believe.

In fact, if I were to guess, based on Gödel's incompleteness theorems, I would say it is foolish to think any theory or method of epistemology can ever perfectly justify or prove itself. However, I consider this irrelevant, if for no other reason than that in the end we have no choice: we must live our lives the way we can. The most certain truths are those closest to our consciousness, those which are directly observable and can be directly interacted with, basically our thoughts and our logic. Anything further away, other people, the world, etc., is at a distance from us and less certain.

“If you can't change it, why worry about it? If you can change it, why worry about it?” - Zen Proverb

http://en.wikipedia.org/wiki/Deductive_reasoning

http://atheism.about.com/od/argumentsforgod/a/cosmological.htm

http://en.wikipedia.org/wiki/G%C3%B6del's_incompleteness_theorems

http://en.wikipedia.org/wiki/Falsifiability

--

Poor man's science and some funny correlations...

The way we typically measure correlations is with an oscilloscope, comparing two simultaneously acquired time traces of voltage. However, oscilloscopes are expensive, and especially at microwave frequencies they are not necessarily the most efficient tool for this.

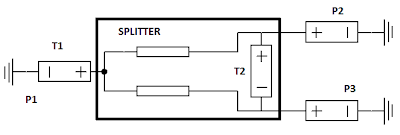

Instead of voltage vs. time there is an equivalent way of representing the signal: the two quadratures, i.e. the sine and cosine amplitudes at the measurement frequency. So we can do the correlation as depicted in the figure below.
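To make the quadrature picture concrete, here is a minimal Python/NumPy sketch (my own illustration, not the sticks' actual signal chain) that extracts the cosine (I) and sine (Q) amplitudes of a tone by mixing a voltage trace with a cosine and a sine at the measurement frequency and averaging. The sample rate, frequency, amplitude and phase are hypothetical values, and averaging over an integer number of periods stands in for a proper low-pass filter.

```python
import numpy as np

def quadratures(v, f, fs):
    """Return the (I, Q) amplitudes of the component of v at frequency f.

    Mixing with cos/sin shifts that component to DC; averaging over an
    integer number of periods then removes the 2f terms (a crude low-pass).
    For v = A*cos(2*pi*f*t + phi) this gives I = A*cos(phi), Q = A*sin(phi).
    """
    t = np.arange(len(v)) / fs
    i = 2 * np.mean(v * np.cos(2 * np.pi * f * t))
    q = -2 * np.mean(v * np.sin(2 * np.pi * f * t))
    return i, q

# Hypothetical test tone: 50 Hz sampled at 1 kHz for exactly 50 periods.
fs, f = 1000.0, 50.0
t = np.arange(1000) / fs
v = 0.8 * np.cos(2 * np.pi * f * t + 0.3)

i, q = quadratures(v, f, fs)
amplitude, phase = np.hypot(i, q), np.arctan2(q, i)
print(amplitude, phase)  # recovers amplitude 0.8 and phase 0.3 rad, up to rounding
```

Each stretch of signal is thus reduced to one complex number I + jQ, which is what the RTL2832U sticks stream; correlating two channels then means correlating these complex values instead of raw voltage traces.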

Now there is one very nice and cheap way of doing this at home. One can buy two of these hacked USB HDTV sticks built around the RTL2832U chip, which allow the IQ values to be streamed on the fly to a computer. This way of collecting data is in fact quite fast: one can collect over 700 GB of data per day.
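As a sanity check on the 700 GB/day figure, here is the back-of-the-envelope arithmetic in Python. It assumes both sticks run at 2.048 MS/s (which I believe is rtl_tcp's default sample rate; the acquisition script further down does not set one explicitly) and that each complex sample is two unsigned bytes, one for I and one for Q.

```python
SAMPLE_RATE = 2.048e6     # assumed samples per second per stick
BYTES_PER_SAMPLE = 2      # one unsigned 8-bit value each for I and Q
STICKS = 2
SECONDS_PER_DAY = 24 * 60 * 60

bytes_per_day = SAMPLE_RATE * BYTES_PER_SAMPLE * SECONDS_PER_DAY * STICKS
print(f"{bytes_per_day / 1e9:.1f} GB per day")  # 707.8 GB per day
```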

A slight hardware modification is needed, though. The reference clock signals of these sticks come from separate crystals, and no two crystals are exactly the same. Without modification, the relative phase of the two sticks therefore rotates and the times at which individual samples are taken drift relative to each other, which results in a broadened correlation peak like the one in the picture below. Additionally, one cannot determine the sign of the correlation unless the clock sources are the same, because the phase is undetermined.
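A minimal NumPy sketch of why free-running crystals wash out the correlation. It models only a constant frequency offset df between the two sticks' local oscillators (the sampling-time drift mentioned above is ignored), with both sticks seeing the same complex noise. The accumulated correlation is then an average over a rotating phasor and shrinks roughly like |sinc(df*T)| for a record of length T. The 2.048 MS/s sample rate and the offsets tried are hypothetical; for scale, a 1 ppm crystal error becomes about 700 Hz at a 700 MHz tuning frequency.

```python
import numpy as np

FS = 2.048e6          # assumed sample rate, samples/s
N = 200_000           # samples per record (about 0.1 s)
rng = np.random.default_rng(0)

# Common complex noise seen by both sticks, unit average power.
a = (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
t = np.arange(N) / FS

def accumulated_corr(df):
    """|<a b*>| when stick b's local oscillator is offset by df Hz,
    so its copy of the same noise rotates in phase at rate df."""
    b = a * np.exp(2j * np.pi * df * t)
    return abs(np.vdot(b, a)) / N

for df in (0.0, 1.0, 10.0, 700.0):
    print(df, accumulated_corr(df))
```

With a shared reference, as in the crystal modification described next, df is zero and the phase stays fixed, so the correlation accumulates instead of averaging away.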

I solved this problem simply by removing the crystal from one of the sticks and capacitively coupling the remaining crystal to the other stick. This seemed to work without problems. Alternatively, one could use a separate clock source to feed the 28.8 MHz reference to both sticks, if one wishes to have a common frequency reference for more devices.

There is one more issue to handle (a rather easy one): the two data streams start with a certain time shift relative to each other. So before collecting data one needs to determine this offset; once data collection has started, however, it remains constant. The phase is also randomized at the start of the data collection. However, the sticks drop no bits and maintain perfect synchronization of samples and phase indefinitely once they are started. What I do is calibrate the phase and offset with each measurement and then let it run indefinitely after that.
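The offset-and-phase calibration can be sketched with an FFT-based cross-correlation in NumPy. This is my own illustration of the idea, not necessarily how the calibration was actually done: find the lag k at which the magnitude of the cross-correlation peaks, and read the phase from the complex value at that peak. The function name is hypothetical, and it assumes the record is much longer than the offset, because the correlation is computed circularly.

```python
import numpy as np

def find_offset_and_phase(a, b):
    """Estimate the integer lag k and phase phi with b[n] ~ a[n - k] * exp(1j*phi).

    xc[m] = sum_n b[n + m] * conj(a[n]) is computed circularly via FFTs;
    its magnitude peaks at m = k, where its angle equals phi.
    """
    n = len(a)
    xc = np.fft.ifft(np.fft.fft(b) * np.conj(np.fft.fft(a)))
    k = int(np.argmax(np.abs(xc)))
    phi = float(np.angle(xc[k]))
    if k > n // 2:
        k -= n  # report large positive indices as negative lags
    return k, phi

# Synthetic check: stick B sees stick A's noise 37 samples later, rotated by 0.9 rad.
rng = np.random.default_rng(1)
a = rng.standard_normal(4096) + 1j * rng.standard_normal(4096)
b = np.roll(a, 37) * np.exp(0.9j)
k, phi = find_offset_and_phase(a, b)
print(k, phi)  # 37 and approximately 0.9
```

Once k and phi are known, the streams can be aligned by comparing b[n + k] * exp(-1j*phi) with a[n]; since the sticks keep sync after starting, this only has to be done once per start.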

To test all of this, I was running some calibrations with split thermal noise from several sources...

And what I saw was perhaps slightly weird... not really, but not immediately intuitive at first sight. The picture below shows the cross-correlation of the signals received by detectors A1 and A2 in two cases where the source is at two different temperatures. I'll explain what these mean. Autocorr is just the power; xcorr is the similarity between the signals. For opposite signals xcorr = -1 (normalized), for identical signals xcorr = 1, and for random (independent) signals xcorr = 0. In the image you should only look at the center; the other values correspond to a temporal shift and would signify temporal correlation should such exist, but in this case we are only interested in simultaneous correlation.
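The normalization described above can be written as a small NumPy function: the zero-lag cross-correlation of two complex IQ records divided by the geometric mean of their powers (autocorrelations). This is a sketch of the standard normalized correlation coefficient, assuming zero-mean signals; the test signals are synthetic.

```python
import numpy as np

def xcorr_normalized(a, b):
    """Zero-lag cross-correlation of zero-mean complex records a and b,
    normalized by their powers, so |result| <= 1."""
    cross = np.vdot(b, a)  # sum(a * conj(b))
    power = np.sqrt(np.vdot(a, a).real * np.vdot(b, b).real)
    return cross / power

rng = np.random.default_rng(2)
n = 100_000
a = rng.standard_normal(n) + 1j * rng.standard_normal(n)
independent = rng.standard_normal(n) + 1j * rng.standard_normal(n)

print(xcorr_normalized(a, a).real)            # identical signals: 1
print(xcorr_normalized(a, -a).real)           # opposite signals: -1
print(abs(xcorr_normalized(a, independent)))  # independent noise: close to 0
```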

So the picture tells you that, at first, when the split signal is at room temperature, there is no correlation and a certain amount of power is received. In the second case I used a HEMT amplifier input as the source for the splitter. This is an interesting case because the input (gate) of the HEMT amplifier is electrically colder than room temperature (here its effective noise temperature is around 50 K, whereas room temperature is 293 K). One might naively think that when the split source is at 293 K one should receive a certain amount of power and see a certain amount of correlation. However, we see no correlation whatsoever. In the second case, when the split source is colder, we see less power than in the first case, as we would expect, but now we also see some correlation. How can that be?

There is a perfectly good explanation for this, though, and it has to do with the way the (Wilkinson) splitter works. It is in fact impossible to make an ideal, impedance-matched 3-port splitter without a fourth channel in it. This means that in order to isolate P2 and P3 (in the following picture) there needs to exist another port (at T2). This port T2 is essentially a resistor which couples perfectly to ports P2 and P3, but in opposite phases relative to port P1. At thermal equilibrium the noises T1 and T2 are equal, but since the contribution from T2 has the opposite sign, you effectively get zero correlation between P2 and P3; only when T1 differs from T2 do you see correlation. Nevertheless, you will see less power arrive at P2 and P3 when T1 < T2, as one would expect. Were the port at T2 not there, you would get correlation due to cross-coupling of the backaction noise from the amplifiers at P2 and P3, so it is in fact necessary.
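A toy model of this explanation in NumPy, under simplifying assumptions of mine: noise amplitudes are complex Gaussian with power proportional to temperature, the splitter is ideal (P1 noise reaches P2 and P3 in phase, isolation-port noise reaches them with opposite signs), and each output adds independent amplifier noise (amplifier backaction is ignored). The expected normalized correlation is then (T1 - T2)/(T1 + T2 + 2*T_amp). The sign in this model depends on which output's phase is taken as reference, so only the magnitude should be compared with a measurement.

```python
import numpy as np

def complex_noise(temp, n, rng):
    """Complex Gaussian noise whose mean power equals temp (power in kelvin units)."""
    return np.sqrt(temp / 2) * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

def splitter_outputs(t1, t2, t_amp, n, rng):
    """Toy Wilkinson model: P1 noise (t1) adds in phase at P2 and P3, the
    isolation-port noise (t2) adds with opposite signs, and each output also
    receives independent amplifier noise (t_amp)."""
    a1 = complex_noise(t1, n, rng)
    a2 = complex_noise(t2, n, rng)
    p2 = (a1 + a2) / np.sqrt(2) + complex_noise(t_amp, n, rng)
    p3 = (a1 - a2) / np.sqrt(2) + complex_noise(t_amp, n, rng)
    return p2, p3

def expected_corr(t1, t2, t_amp):
    """Analytic normalized correlation <p2 p3*> / power for the model above."""
    return (t1 - t2) / (t1 + t2 + 2 * t_amp)

rng = np.random.default_rng(3)
n = 500_000
results = {}
for t1 in (293.0, 50.0):  # room-temperature load vs ~50 K HEMT input; resistor at 293 K
    p2, p3 = splitter_outputs(t1, 293.0, 0.0, n, rng)
    power = np.mean(np.abs(p2) ** 2)
    corr = (np.vdot(p3, p2) / np.sqrt(np.vdot(p2, p2).real * np.vdot(p3, p3).real)).real
    results[t1] = (power, corr)
    print(t1, power, corr, expected_corr(t1, 293.0, 0.0))
```

At equilibrium the two contributions cancel in the correlation while the output power stays at 293 K; with the colder input the power drops and a correlation appears, matching the behavior described above.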

Well, perhaps this experiment or explanation is not the clearest to anyone except those who work with this stuff; nevertheless, I know several people who find this interesting.

Of course I had a certain idea behind this. I was interested in performing an experiment to see antibunching at cryogenic temperatures using a similar setup with amplifiers, splitters and quadrature detectors, but using a qubit as the source of antibunched photons. In that case one should basically see the correlation remain 0 while the power goes up. The photon energy at this frequency is rather low relative to the noise temperature of these amplifiers, though, so this will require a large amount of averaging etc. These amplifiers have a noise temperature of 50 K; one may achieve a system noise temperature of around 5 K by using cryogenic amplifiers. The quantum noise floor at these frequencies is at about 50 mK, though, so the amplifier noise temperature is about a factor of 100 away from where we would ideally wish to be. Nevertheless, with a suitable amount of averaging such measurements can be done.
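For scale, the photon energy expressed as a temperature, hf/k_B, can be computed directly. This is my own arithmetic, assuming "quantum noise floor" refers to this quantity (the standard quantum limit on the noise added by a phase-preserving amplifier is half of it); the frequencies are examples, 700 MHz being the tuning frequency used in the acquisition script.

```python
H = 6.62607015e-34   # Planck constant in J*s (exact in the 2019 SI)
KB = 1.380649e-23    # Boltzmann constant in J/K (exact in the 2019 SI)

def photon_temperature(f_hz):
    """Photon energy h*f expressed as a temperature h*f/k_B, in kelvin."""
    return H * f_hz / KB

for f_hz in (700e6, 1e9):
    t_q = photon_temperature(f_hz)
    print(f"{f_hz / 1e6:.0f} MHz: hf/k_B = {t_q * 1e3:.1f} mK, "
          f"5 K system / hf/k_B = {5.0 / t_q:.0f}")
```

This gives roughly 34 mK at 700 MHz and 48 mK at 1 GHz, so a 5 K system sits a factor of roughly 100 to 150 above that floor.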

...and the correlation from the 50 K noise source in this case was very easy to measure. It's basically a 1-second measurement on a tabletop.
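Why one second suffices can be estimated from the statistical uncertainty of a sample correlation coefficient. This is a rough sketch that assumes independent Gaussian samples at 2.048 MS/s and uses the large-sample standard error (1 - rho**2)/sqrt(N). Neighboring RTL2832U samples are partly correlated by the receiver's filtering, so the real uncertainty is somewhat larger than this estimate.

```python
import math

FS = 2.048e6  # assumed sample rate, samples/s

def corr_standard_error(rho, n):
    """Large-sample standard error of a correlation coefficient estimated
    from n independent Gaussian sample pairs with true correlation rho."""
    return (1 - rho ** 2) / math.sqrt(n)

n_one_second = int(FS * 1.0)
print(corr_standard_error(0.0, n_one_second))  # about 7e-4
```

Even a correlation of a few percent therefore stands out clearly after one second of data.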

One addition to the use of these sticks...

With each restart (which should be avoided) there appears to be, in addition to the randomized phase and offset, an offset which affects the width of the correlation peak. This creates a need to calibrate the absolute value of the correlation amplitude relative to the channel power (there may be 30% variance or so, I suppose). This "offset" is seen as the width of the peak, as seen in the upper left corner of the following picture. Ideally this correlation peak is simply one point, but in the first case it seems to have some width, which results in a less than one-to-one mapping between channel power and correlation value. In the first case a real 100% cross-correlation would be seen as only 70% cross-correlation in the measurement, whereas in the second case a real 100% cross-correlation would also be 100% in the measurement. Notice, though, that the channel powers are unaffected by this, as one would expect. Not a big problem, because one can calibrate against known signals. Also, with a suitable restart you can find a state which persists and always shows 100% correlation when it should.
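One possible mechanism for the widened peak, offered purely as a hypothesis of mine and not something established above: if a restart leaves the two streams offset by a fraction of a sample, the correlation of broadband noise is split between neighboring integer lags, so the central value drops below 1 while the channel powers stay the same. The NumPy sketch below simulates this with an FFT-based fractional delay on white complex noise; the helper names and delay values are illustrative.

```python
import numpy as np

def fractional_delay(x, d):
    """Circularly delay x by d samples (d may be fractional) via an FFT phase ramp."""
    f = np.fft.fftfreq(len(x))  # frequency in cycles per sample
    return np.fft.ifft(np.fft.fft(x) * np.exp(-2j * np.pi * f * d))

def xcorr_at_lags(a, b, lags):
    """Normalized |sum_n b[n + k] * conj(a[n])| for each integer lag k."""
    norm = np.sqrt(np.vdot(a, a).real * np.vdot(b, b).real)
    return np.array([abs(np.vdot(a, np.roll(b, -k))) / norm for k in lags])

rng = np.random.default_rng(4)
a = rng.standard_normal(65536) + 1j * rng.standard_normal(65536)
lags = [-1, 0, 1, 2]
for d in (0.0, 0.3, 0.5):
    peak = xcorr_at_lags(a, fractional_delay(a, d), lags)
    # Peak values per lag, and their root-sum-square over these lags.
    print(d, np.round(peak, 3), np.sqrt(np.sum(peak ** 2)))
```

For a half-sample offset the central value falls to about 2/pi (roughly 0.64) and is shared with the next lag, while the root-sum-square over neighboring lags stays close to 1; if this mechanism applies, that would be one alternative to calibrating against known signals.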

Here's another plot, where I logged the intensity correlation in addition to the voltage correlation while switching the split input from a termination at equilibrium temperature to a termination at a lower temperature. In the lower-temperature case we see increased correlation, both in voltage and in intensity, and lower total power; in the equilibrium case there is higher power but lower correlation.
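For thermal (Gaussian) noise the two kinds of correlation are not independent: the normalized intensity correlation equals the squared magnitude of the voltage correlation (the Siegert relation, a consequence of Gaussian statistics), which is consistent with both rising together in the cold case. Below is a NumPy check under that Gaussian assumption, with a hypothetical voltage correlation of 0.7; "intensity" here means |I + jQ|^2 per sample, which is my guess at what was logged.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 400_000
rho = 0.7  # hypothetical voltage correlation between the two channels

def unit_noise():
    """Circular complex Gaussian noise with unit mean power."""
    return (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)

common = unit_noise()
a = np.sqrt(rho) * common + np.sqrt(1 - rho) * unit_noise()
b = np.sqrt(rho) * common + np.sqrt(1 - rho) * unit_noise()

voltage_corr = (np.vdot(b, a) / np.sqrt(np.vdot(a, a).real * np.vdot(b, b).real)).real
intensity_corr = np.corrcoef(np.abs(a) ** 2, np.abs(b) ** 2)[0, 1]
print(voltage_corr, intensity_corr, voltage_corr ** 2)  # intensity_corr ~ voltage_corr**2
```

For non-Gaussian sources the relation need not hold, so it only applies to thermal noise like the terminations used here.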

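```python
#!/usr/bin/env python3
# Streams raw IQ data from two rtl_tcp servers (one per RTL2832U stick) into
# numbered chunk files in /run/shm (RAM-backed), keeping only recent chunks.
import os
import socket
import threading
import time

CHUNK = 1024 * 512   # maximum bytes per recv() call / chunk file
KEEP = 100           # chunk files older than this many iterations are deleted


def collect(thread_name, port):
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.connect(('127.0.0.1', port))
    # rtl_tcp first sends a 12-byte header ("RTL0", tuner type, gain count).
    # Reading exactly that keeps the sample stream aligned on an I byte.
    s.recv(12, socket.MSG_WAITALL)
    x = 0
    while True:
        # Remove the chunk written KEEP iterations ago so RAM does not fill up.
        try:
            os.remove('/run/shm/%d_%d.bin' % (port, x - KEEP))
        except FileNotFoundError:
            pass
        data = s.recv(CHUNK)
        if not data:          # rtl_tcp closed the connection
            break
        # Binary mode: the stream is interleaved unsigned 8-bit I/Q bytes.
        with open('/run/shm/%d_%d.bin' % (port, x), 'wb') as f:
            f.write(data)
        x += 1
    s.close()


os.system("rm -f /run/shm/*.bin")           # clear chunks from a previous run
os.system("killall -9 rtl_tcp")             # stop any old servers
os.system("./usbreset /dev/bus/usb/002/002")
# One server per stick (-d device index), both tuned to 700 MHz.
os.system("rtl_tcp -p 1001 -f 700e6 -d 0 -g -10 &")
os.system("rtl_tcp -p 1002 -f 700e6 -d 1 -g -10 &")
time.sleep(3)                               # let both servers start listening

threads = [threading.Thread(target=collect, args=("collect-%d" % port, port))
           for port in (1001, 1002)]
for t in threads:
    t.start()
for t in threads:
    t.join()                                # run until the servers go away
```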
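To analyze the recorded chunks, the raw bytes have to be turned into complex samples. A minimal NumPy sketch, assuming rtl_tcp's usual format of interleaved unsigned 8-bit I and Q values centered near 127.5; the helper names are hypothetical, and the file naming follows the acquisition script. Because recv() chunk boundaries differ between the two streams (and a single file may hold an odd number of bytes), consecutive chunks should be concatenated before the offset and phase calibration rather than compared file by file.

```python
import os
import numpy as np

def iq_from_bytes(raw):
    """Convert interleaved unsigned 8-bit I/Q bytes to complex samples in about [-1, 1]."""
    u = np.frombuffer(raw, dtype=np.uint8).astype(np.float64)
    u = (u - 127.5) / 127.5
    return u[0::2] + 1j * u[1::2]

def load_chunks(port, first, last, directory="/run/shm"):
    """Concatenate chunk files <port>_<first>.bin ... <port>_<last>.bin into one IQ array."""
    parts = []
    for x in range(first, last + 1):
        with open(os.path.join(directory, f"{port}_{x}.bin"), "rb") as f:
            parts.append(f.read())
    raw = b"".join(parts)
    if len(raw) % 2:
        raw = raw[:-1]  # drop a trailing I byte whose Q partner is not yet written
    return iq_from_bytes(raw)
```

Only the most recent chunks survive in /run/shm because the script deletes old ones, so the range passed to load_chunks has to fall within that window.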

