Here I describe an approach to metaphysics based on what I call the principle of metaphysical discrimination. The goal of this principle is to divide phenomena into clear, meaningful, distinct categories based on the kinds of limits that can be expected to arise along the continuum between the observed nature of one's own existence and all other existence (further from the self). Supernatural postulates like God, being devoid of predictive utility, are ignored.

We could start by trying to address the question of what consciousness is and how it can exist in a world that appears to be nothing but a Turing machine (at least to first order), but that might be a waste of time, and it has been attempted, more or less fruitlessly, many times before. Instead, let's start by taking for granted that something we call consciousness exists; we know this because that's us. However consciousness works, the cosmos contains everything that is needed for it. Let us also note that there exists a kind of limitation, which we might call private experience or qualia, that applies to every consciousness we are aware of. This limitation appears to prevent us from knowing what (if anything) it feels like to be someone else.

This sort of limitation resembles the phenomenon of complementarity in quantum mechanics, which in its simplest form limits the information any single observer can possess about certain pairs of physical observables, such as position and momentum. In this context we can remain agnostic about whether these variables even have an exact, simultaneous, fundamental existence, and simply note that even if they do, the nature of our existence prevents us from ever gaining such knowledge.

The existence of this sort of complementarity in nature leads me to consider the possibility that private experience is simply another facet of all existence and ultimately no more mysterious than any other observation. Perhaps, then, postulating that "there is more to things" is just a kind of superstition, and everything ultimately boils down to observing the kinds of things that exist. One could bring up the experienced time evolution of "now" as another mysterious aspect of consciousness, but time too might be nothing more than another dimension of existence.

If there is in fact nothing more to existence than existence itself (is there really an alternative?), then there can be no reason for existence. Asking for such a reason isn't even coherent: reasons are just correlations within "the set of all existence". In the spirit of Gödel's incompleteness theorems, it probably remains forever impossible to prove this exactly, but it can nevertheless be true and the most reasonable conclusion.

Saturday 1 December 2018

Thursday 22 November 2018

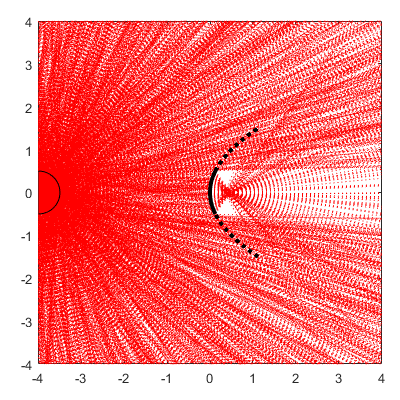

Conservation of etendue

Here's why you can't focus light from a thermal source like the Sun (an omnidirectional thermal emitter) onto a spot that gets hotter than the source's emitting surface: doing so would violate the laws of thermodynamics. Here's what happens if you try...

...no arrangement of lenses, mirrors or any passive elements allows focusing beyond the surface temperature of the source.

Rather than focusing light to a point, lenses and parabolic mirrors form an image of the source, and that image has a minimum size set by the apparent (angular) size of the source. The intensity lost to distance is balanced by a correspondingly smaller image, so focusing alone can't produce a spot hotter than the surface of the emitter.

So no matter how large the magnifying glass, even the size of the Earth, your spot won't get hotter than the surface of the Sun. It's just temperature equalization between two objects, assisted by the magnifying glass in a way similar to bringing the objects closer to each other.
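To put numbers on this, here is a minimal sketch under assumptions not stated in the post: an ideal black absorber that re-radiates only from its front face, the Sun treated as a uniform (Lambertian) disk of angular radius θ_s, optics in air, and no convection, conduction or background radiation. Under those assumptions the étendue limit caps concentration at C_max = 1/sin²θ_s, and the function names are illustrative only.

```python
import math

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
T_SUN = 5772.0           # nominal solar effective temperature, K
R_SUN = 6.957e8          # nominal solar radius, m
AU = 1.495978707e11      # astronomical unit (~mean Earth-Sun distance), m


def max_concentration(theta_s: float) -> float:
    """Etendue limit of a 3D concentrator in air (n = 1): C_max = 1 / sin^2(theta_s)."""
    return 1.0 / math.sin(theta_s) ** 2


def spot_temperature(t_source: float, theta_s: float, concentration: float) -> float:
    """Equilibrium temperature of an ideal black spot that re-radiates from one face.

    Incoming irradiance is C * sigma * T_s^4 * sin^2(theta_s). A requested C above
    the etendue limit is clipped, because no passive optics can exceed it.
    """
    c = min(concentration, max_concentration(theta_s))
    irradiance = c * SIGMA * t_source ** 4 * math.sin(theta_s) ** 2
    return (irradiance / SIGMA) ** 0.25  # solve sigma * T^4 = irradiance for T


theta = math.asin(R_SUN / AU)  # angular radius of the Sun seen from Earth

for c in (1, 100, 10_000, max_concentration(theta), 1e9):
    print(f"C = {c:>14,.0f}  ->  T_spot = {spot_temperature(T_SUN, theta, c):7.1f} K")
```

The clipping encodes the étendue limit rather than deriving it. What the sketch does show is that the limit and the source temperature coincide exactly, since C_max·sin²θ_s = 1, so the best possible spot is exactly as hot as the Sun's surface and no hotter.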

However, purely unidirectional emitters like lasers don't have to obey this kind of limit in the same way. High temperature corresponds to high entropy and high disorder, whereas laser light is highly ordered (low entropy). Unlike cold systems, which are devoid of energy, laser light is a kind of saturated form of energy from which energy can always flow away to increase the entropy of a typical thermodynamic system. Classical thermodynamic systems can't be in this sort of state, as their entropy can always increase. Only certain well-isolated systems can be temporarily driven into such a saturated state by an external source of energy.

Saturday 3 November 2018

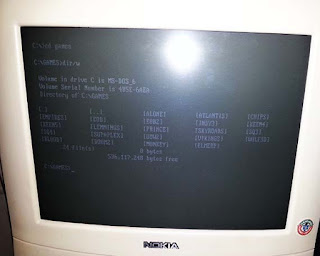

Small Broadcast Video Monitor for Retrogaming

Retrogaming is best experienced on CRTs: they have the least delay, and their timing and scanline reproduction are authentic. SVGA CRTs designed for 70 Hz would perhaps be the sharpest, but their phosphors are a bit too fast, so they flicker too much at PAL (50 Hz) and NTSC (60 Hz) refresh rates, in addition to requiring at least line doubling. They don't natively support the TV-era 50/60 Hz 240p modes; instead they were operated in line-doubled modes, where the common game resolution of 320x200 (70 Hz) was actually drawn as 320x400. However, some SVGA monitors can be operated at twice the refresh rate, 120 Hz, at 240p, giving in some ways the best result. This still isn't fully NTSC-faithful, since there's no way to draw the image without a frame buffer, but it is nevertheless good for image quality comparisons.

I recently acquired a Broadcast Video Monitor (a kind of high quality TV-style display) so I could authentically and natively view PAL/NTSC signals.

Nokia 449Xi (15" Trinitron, >1000 lines) with analog VGA (RGB) input. The displayed resolution is single-scan 320x200 at 120 Hz.

Standard (S)VGA monitors cannot be used to faithfully reproduce games such as those of the Nintendo Entertainment System, due to incompatible signal standards (PAL/NTSC vs. VGA): NTSC has a horizontal sync rate of 15.734 kHz vs. 31.46875 kHz for VGA. An NTSC signal therefore needs to be line doubled, have its vertical scan rate doubled, or both. Doubling the vertical refresh rate would be desirable, but it requires a frame buffer, which adds some delay.
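As a rough cross-check, total scanlines per frame are just the horizontal rate divided by the vertical rate. This sketch assumes nominal rates (NTSC 4.5 MHz/286 ≈ 15.734 kHz, PAL 15.625 kHz, VGA 25.175 MHz/800 = 31.46875 kHz) and ignores the exact line counts any particular console or TSR uses. It illustrates why a 31 kHz monitor needs either line doubling (about 525 lines at 60 Hz) or a doubled vertical rate: at roughly 120 Hz the VGA line rate gives the same ~262 lines per frame as NTSC.

```python
# Nominal horizontal (line) rates in Hz.
H_NTSC = 15734.26   # 4.5 MHz / 286
H_PAL = 15625.0
H_VGA = 31468.75    # 25.175 MHz pixel clock / 800 pixels per line


def lines_per_frame(h_rate_hz: float, v_rate_hz: float) -> float:
    """Total scanlines (visible + blanking) in one vertical period."""
    return h_rate_hz / v_rate_hz


MODES = [
    ("NTSC field, 59.94 Hz", H_NTSC, 60 / 1.001),
    ("PAL field, 50 Hz", H_PAL, 50.0),
    ("VGA 400-line mode, 70 Hz", H_VGA, 70.086),
    ("VGA 480-line mode, 59.94 Hz", H_VGA, 59.94),
    ("VGA single-scan 240p, ~120 Hz", H_VGA, 119.88),
]

for name, h, v in MODES:
    print(f"{name:<32} {lines_per_frame(h, v):6.1f} lines per frame")
```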

Difference between text mode (80x25) at 240p (60 Hz) on the Sony BVM and at 400p (70 Hz) on an SVGA monitor. VGA text-mode characters are 9x16 pixels, whereas at 240p the characters are 8x8 pixels. These correspond to resolutions of 720x400 and 640x200, respectively.
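The two resolutions follow directly from the 80x25 character grid multiplied by the cell size. A trivial check, with an illustrative function name:

```python
from typing import Tuple


def text_mode_resolution(cols: int, rows: int, cell_w: int, cell_h: int) -> Tuple[int, int]:
    """Pixel resolution of a character-cell text mode: grid size times cell size."""
    return cols * cell_w, rows * cell_h


print(text_mode_resolution(80, 25, 8, 8))    # 240p-style 8x8 cells -> (640, 200)
print(text_mode_resolution(80, 25, 9, 16))   # VGA 9x16 cells -> (720, 400)
```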

A few more 240p shots, perhaps less commonly seen, from DOS games.

The monitors.

The TSRs needed for 240p 60 Hz and 120 Hz output in DOS.

Monday 10 September 2018

Most new ideas are stupid and dangerous, not unlike many old ideas, but some new ideas are vital, and without them we're going to perish and die

The problem with reasons for causal things is that they're related to time, but causality is just an observation. Sticks don't come into existence at their tips; the tips are just an edge (of an object that exists). If the universe exists today, why would it exist tomorrow? What is the cause for the existence of tomorrow? No reason? Perhaps we should try to demonstrate that existence can never have a reason, or more exactly, that reasons for existence are ultimately nonsense. Then is the reason for the existence of consciousness also nonsense? Perhaps it too is just an observation about a thing that exists.

--

Why not just say that morality and values originate from our state of existence, which is fundamentally arbitrary but can be universal across a broad range of different societies to the extent that we humans share such conditions due to our evolutionary history?

--

https://codegolf.stackexchange.com/questions/11880/build-a-working-game-of-tetris-in-conways-game-of-life

Saturday 1 September 2018

If you can't do the impossible, do the honorable

Often when I sleep, I forget that there are painful and sometimes nearly impossible things I must eventually overcome or else suffer what is to come. For a moment I feel fine, but even in my sleep, I know that when I wake up, I will forever remember what's ahead. This is the state of my being, every waking moment save a few of the most intense minutes of the year. Every time I awaken from my amnesia, the memories hit me hard. It never goes away, and almost nothing affects it.

As time is running out, my faith in a future where I'm free from that fire is slowly fading away. Time itself has shown me that the things I derive value from don't appear to be going my way. This is because my true goals have always had poor prospects within my expected lifetime, but also because I've always had an extremely hard time finding comparable value in anything else. The universe may hold unimaginable wonders, but that doesn't mean I wouldn't be stuck right where I am, until the end of time. Yet I go on.

Your existence is not knowledge you’ve discovered by deduction or any other method of reasoning. Rather it is a state of affairs you keep observing.

Reddit had some funny questions two people should ask each other before getting married. I'm not getting married, but theoretically speaking...

How many kids do you want? 0, so many of the following answers are purely theoretical.

What values do you want to instill in your children? Kindness, thoughtfulness, rationality, fairness, forgiveness, patience, honesty, stability, nature, health, sense of hope and direction.

How do you want to discipline your kids? I haven't thought about it, since I don't want to have any kids. I would probably have to figure out something that makes them understand clearly why they're being disciplined and that is fair and proportionate.

What would you do if one of your children said he was homosexual? Nothing, I'm fine with homosexuality even if I'm heterosexual myself.

What if our children didn't want to go to college? That's up to them, but I suppose I might consider having failed as a father a bit. Depends on the specifics. Difficult to say.

How much say do children have in a family? Some.

How comfortable are you around children? Not at all.

Would you be opposed to having our parents watch the children so we can spend time alone together? Sounds nice, theoretically speaking that is.

Would you put your children in private or public school? Public, unless there was some strong reason not to.

What are your thoughts on homeschooling? I think there are benefits to both, but I would be in favor of regular schooling.

Would you be willing to adopt if we couldn't have kids? Theoretically yes, but if I wanted kids I think I'd still want my own kids primarily. I guess half of the "fun" would be to see how crazy they ended up being. Also there would be the research aspect and the project aspect.

Would you be willing to seek medical treatment if we couldn't have kids naturally? Certainly, if I wanted kids, that is. Otherwise, medical reasons for not being able to have kids might even be ideal for simplifying our sex life.

Do you believe it's okay to discipline your child in public? Preferably not, but if the circumstance demands it then I suppose.

How do you feel about paying for your kid's college education? Should the government not pay for it, I would probably think of it as our responsibility together. If my wife couldn't afford to pay for it, I would probably attempt to pay for it myself.

How far apart do you want kids? If I wanted kids, I have no clue. Besides, I think one would be plenty.

Would you want someone to stay home with the kids or use daycare? I have no clue.

How would you feel if our kids wanted to join the military rather than go to college? Difficult to say. I'm not fundamentally opposed.

How involved do you want grandparents to be in our parenting? It might be nice to have them fairly involved. Difficult to say what I would think about such in reality.

How will we handle parental decisions? I have no idea.

----

Would you be willing to go to marriage counseling if we were having marital problems? Yes. Especially if it's financially reasonable.

If there is a disagreement between me and your family, whose side do you choose? Whomever I believe is right.

How do you handle disagreements? By prioritizing and negotiating.

Would you ever consider divorce? Yes. I intend to live forever and can't really see myself spending all of eternity with a single person anyway. Maybe 100 years if everything goes well, but not eternity and certainly not under every conceivable circumstance.

Would you rather discuss issues as they arise or wait until you have a few problems? As they arise, though I admit that I might look for comfort and ignore some things for a while.

How would you communicate you aren't satisfied sexually? Verbally if there is something to be gained.

What is the best way to handle disagreements in a marriage? By prioritizing and negotiating.

How can I be better about communicating with you? I don't know.

----

What are your views on infidelity? Should under no circumstance happen. Immediate grounds for termination.

What are your religious views on marriage? I have no religion. Marriage is irrelevant, but can be done for tradition's or entertainment's sake.

What's more important, work or family? Depends. I suppose family would be, but all in moderation.

What are your political views? No stupid bullshit. Mind your own business. Society is supposed to maintain infrastructure and act as insurance against unpredictable, out-of-control events that unduly affect individual lives. A sustainable ecosystem is a top priority.

What are your views on birth control? An important thing that should without exception be trustworthy.

Would you rather be rich and miserable or poor and happy? I suppose most people would choose happy, but given that it might be impossible for me to be happy and poor, perhaps something in between those two extremes. Neither miserable nor poor. Even if that leads to a situation where I feel nothing, that might end up being the best alternative. Preferably, of course, the best of both worlds.

Who will make the biggest decisions of the household? We do.

What would you do if someone said something bad about me? Nothing, other than defending you verbally if you deserved it.

Would you follow the advice of your family before your spouse? Whichever was more rational in a given situation.

What do you believe the role of a wife is? I don't believe in roles. The goal is happiness.

Who should do household chores? Whoever finds them necessary.

What do you believe the role of a husband is? I don't believe in roles. If we're happy, it's all good.

----

How do you feel about debt? I don't want any, but I suppose it's mandatory if one wants to buy a house. That's pretty much the only situation I'm willing to consider it.

Would you share all money with your spouse or split the money into different accounts? Split.

What are your views on saving money? There must always be savings that are enough to sustain current lifestyle for at least a year. Preferably there is a long term plan that will make us financially independent.

What are your views on spending money? A complex one, but quite rational.

What if we both want something but can't afford both? If it's not something we can't share there is no problem because we have separate accounts.

How well do you budget? Extremely.

Do you feel it is important to save for retirement? In some respects yes.

Would you be willing to get a second job if we had financial problems? Potentially.

Do you have any debt? No.

What if a family member wants to borrow a large sum of money? Generally speaking, I'm not lending anyone any money unless I have a significant excess.

Who will take care of the financial matters of the household? Both do their part.

----

What would you do if we fell out of love? Probably feel quite sad.

What are your career aspirations? Scientific research and financial independence as soon as possible after which I plan to spend my time doing financially independent research and in general stuff that interests me.

What would you like to be doing five or ten years from now? Travel the world and do independent research.

What do you think is the best way to keep the love alive in a marriage? I don't really know. I guess we'll just have to find out.

How do you think life will change if we got married? Hopefully in no particular manner.

What is the best thing about marriage? The cake and maybe the organ music.

What is the worst thing about marriage? The guests and the costs.

What is your idea of the best weekend? Climbing a mountain.

How important are wedding anniversaries to you? Not at all.

How would you like to spend special days? Doing something fun.

What kind of grandparent do you want to be someday? I don't, but if I did then I suppose the kind of person I could rely on and respect.

What type of house do you want to live in? Big, luxurious, at the seashore, large private lot, nice view, no neighbours, surrounded by nature. Not too far from a big city.

What is your biggest fear about marriage? Change.

What excites you about getting married? Nothing in particular.

What do wedding rings mean to you? Nothing in particular.

Are you afraid to talk to me about anything? No.

What do you think would improve our relationship? I don't know.

What would be one thing you would change about our relationship? Hopefully nothing.

Do you have any doubts about the future of our relationship? Yes.

Do you believe love can pull you through anything? No.

Is there anything you don't trust about me? Probably plenty, but hopefully nothing critical. I don't trust anyone fully, including myself.

----

Which would you choose - dishes or laundry? Laundry.

Do you like pets? Not really. I like animals sometimes, but I wouldn't want to take care of them.

How many pets do you want? 0. Unless we live on a big ranch and have plenty of people to take care of them. Then it might not matter if there are some animals hanging around.

What do you want to do during retirement? I'm not planning on retiring ever or alternatively I'm planning on retiring as soon as possible and doing what I please for the rest of my life. Maybe sail around the world, visit the moon, climb Everest or something similar.

At what age would you like to retire? If not never then this age.

Monday 18 June 2018

Since neither democracy nor authoritarianism really works...

Perhaps representatives should be selected by a random number generator, as a kind of fair sample of the population. If a person declines, just draw another one until the required number is reached.

Never allow a single person to make any decisions; instead, have all decisions made by a set of representatives who are reshuffled every now and then. To guarantee a majority decision, always use an odd number of representatives.

Bonus principle: after every term, randomly select 50% of the representatives to continue into the next term, so the system can also draw on experience to a certain (hopefully healthy) degree.
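The selection rules above can be sketched in a few lines of Python. This is only an illustration under assumptions the post doesn't specify: people are identified by strings, a hypothetical `willing` function stands in for actually asking someone, "50%" is rounded down for an odd panel, and outgoing members are not immediately redrawn.

```python
import random
from typing import Callable, Iterable, List, Sequence


def fill_panel(population: Sequence[str], members: List[str], size: int,
               accepts: Callable[[str], bool], rng: random.Random,
               exclude: Iterable[str] = ()) -> List[str]:
    """Top up `members` to `size` people drawn uniformly at random.

    Anyone who declines is skipped and the next randomly drawn person is asked.
    """
    if size % 2 == 0:
        raise ValueError("use an odd panel size so yes/no votes cannot tie")
    panel = list(members)
    skip = set(panel) | set(exclude)
    candidates = [p for p in population if p not in skip]
    rng.shuffle(candidates)  # a random order is equivalent to drawing without replacement
    for person in candidates:
        if len(panel) == size:
            break
        if accepts(person):
            panel.append(person)
    if len(panel) < size:
        raise RuntimeError("not enough people accepted a seat")
    return panel


def next_term(population: Sequence[str], current: List[str],
              accepts: Callable[[str], bool], rng: random.Random) -> List[str]:
    """Keep a random half (rounded down) of the panel and draw newcomers for the rest."""
    kept = rng.sample(current, len(current) // 2)
    # Outgoing members are excluded, so only the randomly kept ones carry over.
    return fill_panel(population, kept, len(current), accepts, rng, exclude=current)


def majority(votes: Sequence[bool]) -> bool:
    """Simple majority of an odd number of yes/no votes (no ties possible)."""
    if len(votes) % 2 == 0:
        raise ValueError("an odd number of votes is required")
    return sum(votes) > len(votes) // 2


rng = random.Random(2018)
citizens = [f"citizen-{i}" for i in range(10_000)]


def willing(person: str) -> bool:
    """Hypothetical stand-in for asking someone: about 70% accept."""
    return rng.random() < 0.7


first_panel = fill_panel(citizens, [], 9, willing, rng)
second_panel = next_term(citizens, first_panel, willing, rng)
print(first_panel)
print(second_panel)
print(majority([True, False, True]))
```

Shuffling the remaining population once and walking through it keeps the draw uniform while making "if someone declines, draw another" a simple skip.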

TV-series I've recently enjoyed:

12 Monkeys

The Crossing

Marvel's Agents of S.H.I.E.L.D.

Designated Survivor

Helix

Van Helsing

Blacklist

Friday 15 June 2018

It's often better to do nothing at all than to take two steps back and only one step forward

According to the holographic principle, the number of "bits" associated with a horizon is proportional to the area of the horizon (in Planck units). Examples are the event horizon of a black hole and the Hubble horizon of the observable universe, where objects recede from us at the speed of light due to the expansion of space; the latter also roughly limits the patch of the cosmos that is causally connected to us.

If one assumes that the total energy of such a patch of the cosmos is distributed evenly among the horizon degrees of freedom according to the equipartition theorem, there exists a corresponding force arising from the tendency of the universe to increase its entropy (the second law of thermodynamics). This mechanism may be responsible for both the traditional form of gravity and the cosmological constant.

Even a fairly naive calculation puts the value of the cosmological constant (associated with dark energy) in the right ballpark with respect to observations. If this mechanism is real, there might be no need for any special dark energy.

```matlab
T = 13.8e9; % current age of the universe (in years)
G = 6.674e-11; % gravitational constant (SI)
c = 299792458; % speed of light (m/s)
hbar = 1.0546e-34; % reduced Planck constant (J s)
ly = 9.4607e15; % lightyear (in meters)
lp = sqrt(hbar*G/c^3); % Planck length
R = T*ly % radius of causally connected universe (in meters) or c/H (Hubble horizon)
r = R/lp % radius in Planck lengths
N = pi*r^2 % number of bits associated with the Hubble horizon (area/4 in Planck units)
% btw. this value of N is approx. 2^407 which when interpreted (kind of) classically
% suggests that no quantum computer with more than 407 ideal qubits will work as expected
% but if these are qubits instead of bits then no such conclusion is true
% Schwarzschild radius of the Hubble sphere: rs = 2*G*M/c^2 =>
M = R*c^2/2/G % SI units
m = M/sqrt(hbar*c/G); % in Planck units
% [arXiv:1001.0785v1]
% equipartition of energy among the horizon degrees of freedom: 0.5*kT = m/(pi*r^2)
kT = m/(pi*r^2)/0.5
% entropic force F = kT * gradient(N) =>
F = kT*2*pi*r;
% Second Friedmann equation (Planck units): a''/a = -4*pi*(rho/3 + p) + Lambda/3
% Cosmic expansion: r''/r = F/(mr)
F/(m*r) % ~ 6e-122 so we are in the correct ballpark [arXiv:1105.3105v1]
4/r^2 % m is actually irrelevant if you model the universe this way, but
% you could also just take the directly observed values and arrive at similar lambda
% additionally by combining the Unruh temperature T = a*hbar/(2*pi*c*k),
% Newton's second law F = ma and area of holographic surface A = 4*pi*r^2,
% one arrives at Newton's law of universal gravitation F = GmM/r^2
```

Friday 8 June 2018

Some speculation on indeterministic experience in deterministic cosmos

According to the Bekenstein bound, the state of the observable universe is described by the information contained in fewer than 10^123 bits (or qubits, depending on your preferred interpretation), given by pi*c^3*r^2/G/hbar/log(2) for a horizon of radius r. A wavefunction over that many qubits lives in a Hilbert space of dimension at most 2^(10^123). While that's huge, it's still finite, even if the cosmos itself might be infinite.

For an observer at the very edge of our observable universe, their observable universe is described by a different set of bits than ours. Because we are still causally connected, we share some of those bits, but not all. Their Hubble sphere (the causally connected part of space, bounded where the expansion of space reaches the speed of light, 13.8 billion light years from its center) differs from ours, yet the two partially overlap. To some degree this is true for all observers, so, to put it poetically, we share reality with other people, but our realities are not the same.

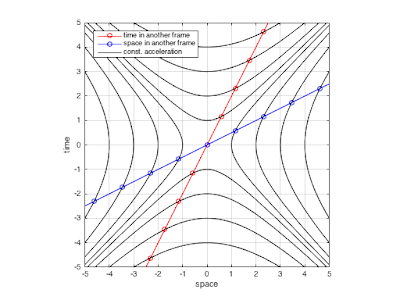

We may speculate that some of the bits constituting an observer's Hubble sphere can be changed by accelerating, or, perhaps more accurately, that what constitutes their Hubble sphere can move or change. A related phenomenon is the Unruh effect, which causes an accelerating observer to detect thermal radiation in a vacuum where an inertial observer sees none. There are several ways to justify this. One is to draw the Minkowski diagram of an accelerating observer and notice that a (Rindler) horizon separates a causally disconnected region of space from them; this leads to an effect that is essentially the same as the Hawking radiation of a black hole. Another is to consider what happens to vacuum fluctuations under acceleration and notice that they gain energy. Something similar happens in the dynamical Casimir effect.

However, on a very general level one might also think that this radiation originates from outside the observer's initially causally connected region of space. If so, it is not surprising that such fluctuations appear indeterministic to the observer.

Quantum indeterminism may be a result of previously causally disconnected information getting mixed into our reality in certain phenomena, such as acceleration. These phenomena are closely related to entanglement, and according to the ER=EPR conjecture the existence of space is basically a result of entanglement. This would suggest that entanglement is also related to the expansion of the universe, the cosmological constant and dark energy. It would be kind of nice to get rid of indeterminism and dark energy all at once.

--

https://arxiv.org/pdf/1803.10454.pdf

Finally getting data that might assist with quantum gravity?

--

One may think one culture is superior to others even if it's shit; it's just less shit than the others (that one is aware of). At least that's what I mostly think. Generally speaking, I'm not a big fan of any culture. I'd like to think it's more important that a society is governed by scientific thinking, analytical problem solving and progressive, exploratory goals that aim to maximize personal freedom, physical well-being and long-term sustainability.

Freedom of Speech: The right to tell people what they don't want to hear. Unfortunately this view doesn't seem particularly popular these days. Short of lying about personally harmful information, I'm pretty much in favor of absolute freedom of speech without exceptions.

--

I think it is ok for authors (please let's not call them creators, they are not gods) to ask for money for copies of their works (please let's not devalue these works by calling them content) in order to gain income (the term compensation falsely implies it is a matter of making up for some kind of damages).

— Richard Stallman

--

I wonder if I'd get more done if I were rich... oh well, I guess we'll never know.

Thursday 17 May 2018

Government secrets are a threat to democracy

How PAL television carried color information...

```matlab
visual = 52e-6;               % visible line is 52 microseconds
t = linspace(0, visual, 768); % horizontal
f = 4.43361875e6;             % color carrier

% test image (assumed for this sketch, not part of PAL): simple color ramps
nlines = 576;
[X, Z] = meshgrid(linspace(0, 1, 768), linspace(0, 1, nlines));
R = X; G = Z; B = 1 - X;

Y = zeros(nlines, 768); U = Y; V = Y; I = Y; Ur = Y; Vr = Y;
g = sin(2*pi*f*t);
h = cos(2*pi*f*t);
N = 2; % even with N this small the result is rather good

for y = 1:nlines
  % instead of RGB, intensity Y and color information U and V were transmitted
  % coefficients were chosen based on sensitivity of human eye
  Y(y, :) = 0.299*R(y, :) + 0.587*G(y, :) + 0.114*B(y, :);
  U(y, :) = 0.493*B(y, :) - 0.493*Y(y, :);
  V(y, :) = 0.877*R(y, :) - 0.877*Y(y, :);

  % Phase Alternating Line: the sign of V flips on every other line
  s = (1-2*mod(y,2));

  % IQ-modulated color signal was added to the intensity signal
  I(y, :) = Y(y, :) + U(y, :).*g + s*V(y, :).*h;

  % color could be easily recovered from I: multiply by the carrier and
  % average over a short window; the factor 2 undoes mean(sin^2) ~ 1/2
  for x = N+1:768-N
    Ur(y, x) = 2*mean(g(x-N:x+N).*I(y, x-N:x+N));
    Vr(y, x) = 2*mean(s*h(x-N:x+N).*I(y, x-N:x+N));
  end
end
```

--

Sunday 22 April 2018

Softcore CPU, assembler and xorshift pseudorandom on an FPGA

I thought it might be nice to make a simple 32-bit softcore CPU on an FPGA. The most natural opcodes (for me) correspond to common C operations, such as arithmetic +, -, *, /, %, =, comparisons (and conditional jumps) <, >, <=, >=, !=, == and bit operations &, |, ^, ~, <<, >>. This is why I decided to call it CCPU. I wrote a simple assembler in C, the softcore CPU in Verilog and an xorshift pseudorandom number program in assembly. So far the CPU implements only the opcodes that program needs: JMP, JL, MOV, STO, SHL, SHR, XOR and ADD.

--

```asm
0   MOV A, 1      ; this program will do xorshift32
2   MOV B, 0      ; displayed as noise on CCPU's VGA out
4   MOV C, 1
6   MOV D, 13
8   MOV E, 17
10  MOV F, 5
12  MOV H, 256
14  MOV I, 64000
16  SHL G, A, D
17  XOR A, A, G   ; A ^= A << 13
18  SHR G, A, E
19  XOR A, A, G   ; A ^= A >> 17
20  SHL G, A, F
21  XOR A, A, G   ; A ^= A << 5
22  STO B, A      ; MEM[B] = A
23  ADD B, B, C   ; B = B + 1
24  JL B, I, 16   ; if(B<64000) goto 16;
26  JMP 2         ; goto 2;
```

--

```verilog
module xorshift(input clock, output VGA_HS, VGA_VS, VGA_B);

wire cpu_clock;
wire pixel_clock;

/* VGA 640x400 70Hz */
parameter H   = 640; /* width of visible area */
parameter HFP = 16;  /* unused time before hsync */
parameter HS  = 96;  /* width of hsync */
parameter HBP = 48;  /* unused time after hsync */
parameter V   = 400; /* height of visible area */
parameter VFP = 12;  /* unused time before vsync */
parameter VS  = 2;   /* width of vsync */
parameter VBP = 35;  /* unused time after vsync */

reg [9:0] h_cnt;          /* horizontal pixel counter */
reg [9:0] v_cnt;          /* vertical pixel counter */
reg hs, vs, pixel;
reg vmem [320*200-1:0];   /* 1-bit 320x200 frame buffer */
reg [15:0] video_counter; /* current frame buffer address */

/* horizontal pixel counter */
always @(posedge pixel_clock)
begin
    if (h_cnt == H+HFP+HS+HBP-1)
        h_cnt <= 0;
    else
        h_cnt <= h_cnt + 1;
    if (h_cnt == H+HFP)    hs <= 0; /* hsync is active low */
    if (h_cnt == H+HFP+HS) hs <= 1;
end

/* vertical pixel counter, advanced once per line */
always @(posedge pixel_clock)
begin
    if (h_cnt == H+HFP)
    begin
        if (v_cnt == VS+VBP+V+VFP-1)
            v_cnt <= 0;
        else
            v_cnt <= v_cnt + 1;
        if (v_cnt == V+VFP)    vs <= 1; /* vsync is active high in this mode */
        if (v_cnt == V+VFP+VS) vs <= 0;
    end
end

/* RAMDAC: each frame buffer pixel is shown as a 2x2 block */
always @(posedge pixel_clock)
begin
    if ((v_cnt < V) && (h_cnt < H))
    begin
        if (h_cnt[0] == 1)
            video_counter <= video_counter + 1;
        pixel <= vmem[video_counter];
    end
    else
    begin
        if (h_cnt == H+HFP)
        begin
            if (v_cnt == V+VFP)
                video_counter <= 0;
            else if ((v_cnt < V) && (v_cnt[0] != 1))
                video_counter <= video_counter - 320; /* repeat this line */
        end
        pixel <= 0;
    end
end

assign VGA_HS = hs;
assign VGA_VS = vs;
assign VGA_B  = pixel;

/* CPU */
reg [31:0] mem [255:0]; /* program memory, loaded from ram.hex */
reg [31:0] D;           /* instruction word */
reg [31:0] data;        /* word after the instruction: immediate or jump target */
reg [31:0] pc;
reg [31:0] r [31:0];    /* registers, A = r[0] */
reg pclk, cclk;

/* instruction fields: opcode o and register operands a, b, c */
wire [7:0] o = D[31:24];
wire [7:0] a = D[23:16];
wire [7:0] b = D[15:8];
wire [7:0] c = D[7:0];

initial $readmemh("ram.hex", mem);

initial
begin
    pc = 0;
    D = 0;    /* opcode 0 = NOP until the first fetch */
    pclk = 0;
    cclk = 0;
    h_cnt = 0;
    v_cnt = 0;
    video_counter = 0;
end

always @(posedge clock)
begin
    pclk <= ~pclk; /* 25 MHz, assuming a 50 MHz board clock */
    cclk <= ~cclk;
end

/* fetch on the rising edge: instruction word and the word after it */
always @(posedge cpu_clock)
begin
    D    <= mem[pc];
    data <= mem[pc+1];
end

/* execute on the falling edge */
always @(negedge cpu_clock)
begin
    case (o)
    1:  pc <= data;                                        /* JMP */
    6:  if (r[a] < r[b]) pc <= data; else pc <= pc + 2;    /* JL (2 words) */
    8:  begin r[a] <= data;          pc <= pc + 2; end     /* MOV */
    11: begin vmem[r[a]] <= r[b];    pc <= pc + 1; end     /* STO */
    12: begin r[a] <= r[b] << r[c];  pc <= pc + 1; end     /* SHL */
    13: begin r[a] <= r[b] >> r[c];  pc <= pc + 1; end     /* SHR */
    17: begin r[a] <= r[b] ^ r[c];   pc <= pc + 1; end     /* XOR */
    18: begin r[a] <= r[b] + r[c];   pc <= pc + 1; end     /* ADD */
    default: pc <= pc + 1;                                 /* NOP */
    endcase
end

assign pixel_clock = pclk;
assign cpu_clock   = cclk;

endmodule
```

--

```c
#include <inttypes.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Branching */
#define NOP 0
#define JMP 1
#define JE  2
#define JNE 3
#define JG  4
#define JGE 5
#define JL  6
#define JLE 7
/* Loading */
#define MOV 8
#define LDA 10
#define STO 11
/* Logic */
#define SHL 12
#define SHR 13
#define NOT 14
#define AND 15
#define OR  16
#define XOR 17
/* Arithmetic */
#define ADD 18

/* Mnemonic as it appears in the first three columns of a source line,
   opcode, number of register operands, and whether a second word
   (immediate value or jump target) follows the instruction word. */
struct instr { const char *name; int code, nregs, imm; };

static const struct instr table[] = {
    {"NOP", NOP, 0, 0}, {"JMP", JMP, 0, 1},
    {"JE ", JE,  2, 1}, {"JNE", JNE, 2, 1}, {"JG ", JG,  2, 1},
    {"JGE", JGE, 2, 1}, {"JL ", JL,  2, 1}, {"JLE", JLE, 2, 1},
    {"MOV", MOV, 1, 1}, {"LDA", LDA, 2, 0}, {"STO", STO, 2, 0},
    {"SHL", SHL, 3, 0}, {"SHR", SHR, 3, 0}, {"NOT", NOT, 2, 0},
    {"AND", AND, 3, 0}, {"OR ", OR,  3, 0}, {"XOR", XOR, 3, 0},
    {"ADD", ADD, 3, 0},
};

/* write one 32-bit word to the binary image and to the $readmemh hex image */
static void emit(uint32_t w, FILE *out, FILE *outh) {
    fwrite(&w, sizeof w, 1, out);
    fprintf(outh, "%08" PRIx32 "\n", w);
}

int main(void) {
    FILE *in = fopen("xorshift.asm", "r");
    FILE *out = fopen("ram.bin", "wb");
    FILE *outh = fopen("ram.hex", "w");
    char buf[256], nbuf[64];
    int line = 0; /* address of the next instruction word */

    if (in == NULL || out == NULL || outh == NULL) {
        perror("fopen");
        return 1;
    }

    while (fgets(buf, sizeof buf, in) != NULL) {
        const struct instr *ins = NULL;
        int regs[3] = {0, 0, 0};
        int i, j = 0, k = 0, imm;
        size_t t;
        uint32_t word;

        if (strlen(buf) < 3)
            continue;

        /* find opcode */
        for (t = 0; t < sizeof table / sizeof table[0]; t++)
            if (strncmp(buf, table[t].name, 3) == 0)
                ins = &table[t];
        if (ins == NULL)
            continue; /* blank line or unknown mnemonic */

        /* scan operands up to the end of the line or a ';' comment:
           digits form the number, capital letters name registers */
        for (i = 3; buf[i] != '\0' && buf[i] != '\n' && buf[i] != ';'; i++) {
            if (buf[i] >= '0' && buf[i] <= '9' && j < (int)sizeof nbuf - 1)
                nbuf[j++] = buf[i];
            else if (buf[i] >= 'A' && buf[i] <= 'Z' && k < 3)
                regs[k++] = buf[i] - 'A';
        }
        nbuf[j] = '\0';
        imm = atoi(nbuf);

        /* instruction word: opcode, then up to three register fields */
        word = (uint32_t)ins->code << 24;
        for (i = 0; i < ins->nregs; i++)
            word |= (uint32_t)regs[i] << (16 - 8 * i);
        emit(word, out, outh);
        if (ins->imm)
            emit((uint32_t)imm, out, outh);

        /* listing: address, mnemonic and operands */
        printf("%d\t%.*s", line, ins->name[2] == ' ' ? 2 : 3, ins->name);
        for (i = 0; i < ins->nregs; i++)
            printf("%s%c", i ? ", " : " ", 'A' + regs[i]);
        if (ins->imm)
            printf("%s%d", ins->nregs ? ", " : " ", imm);
        printf("\n");

        line += ins->imm ? 2 : 1;
    }

    fclose(in);
    fclose(out);
    fclose(outh);
    return 0;
}
```
